Defining the New Divide in Software Development

The rapid integration of Artificial Intelligence into the software development lifecycle has created a new strategic imperative for engineering leaders: understanding the divide between two distinct, often conflated paradigms, ‘vibe coding’ and ‘AI-assisted engineering’. Governing this new reality is now a core leadership competency. For technical leaders aiming to harness AI’s benefits while systematically mitigating its risks, this distinction is essential to building resilient, high-performing teams.

The purpose of this white paper is to provide a clear, strategic analysis for technical leadership navigating this new frontier. It will define each approach, compare their impacts on the critical engineering pillars of quality, security, and team development, and offer actionable recommendations for governance and adoption. The goal is to equip leaders with the vocabulary and framework needed to foster innovation responsibly, ensuring that AI serves as a powerful collaborator, not an unwitting source of technical debt and vulnerability.

We will begin by establishing the foundational definitions of these two emerging methodologies, clarifying the philosophies and developer roles that distinguish creative flow from controlled collaboration.

Defining the Paradigms: From Creative Flow to Controlled Collaboration

To build a durable strategic framework, a precise, shared vocabulary is essential. Conflating the improvisational nature of vibe coding with the disciplined application of AI-assisted engineering can devalue the discipline of engineering and give teams an incomplete picture of what it takes to build robust, production-ready software. This section deconstructs the core philosophies, developer roles, and intended outcomes of both paradigms, drawing directly from the definitions provided by their proponents and key industry analysts.

Vibe Coding: The Creative Prompt-Driven Flow

Vibe coding is a software development technique centered on a high level of abstraction, where the developer guides a large language model (LLM) through natural language prompts. Technologist Simon Willison defines it most succinctly as “building software with an LLM without reviewing the code it writes.” The goal is speed and creative momentum over deep code review.

The term was coined by AI researcher Andrej Karpathy, who defined it as the process where you “fully give in to the vibes, embrace exponentials, and forget that the code even exists.” The core characteristic of this approach is accepting AI suggestions without rigorous review, focusing instead on rapid, iterative experimentation to quickly transform ideas into working prototypes. In this paradigm, the developer’s role shifts from a traditional programmer to an “architectural conductor or guide.”

AI-Assisted Engineering: The Human-in-Control Multiplier

AI-assisted engineering is an approach that builds upon traditional coding practices by incorporating AI as a powerful collaborator, not a replacement for fundamental engineering principles. It is a methodical augmentation of the software development lifecycle, where AI tools are used to accelerate tasks, but human expertise remains the final arbiter of quality and correctness.

This paradigm is defined by its requirement for “careful human-led review, validation, and refinement.” Unlike vibe coding, the human engineer remains “firmly in control” and is ultimately responsible for the security, scalability, and maintainability of the final product. The engineer is on the hook for every line of code that is committed, whether it was written by them or generated by an AI.

Key activities within AI-assisted engineering include:

- Leveraging AI for routine tasks like generating boilerplate code, assisting with debugging, and scripting deployment configurations.

- Critically assessing, refining, and strategically incorporating AI-generated suggestions into the broader codebase.

- Maintaining the professional responsibility to be able to fully explain any committed code to another team member.

With these definitions established, we can now proceed to a direct comparative analysis of their practical implications for engineering organizations.

Comparative Analysis: Strategic Implications for Engineering Teams

The strategic value of any new methodology is revealed in its real-world application. While both vibe coding and AI-assisted engineering leverage the same underlying AI technology, their divergent philosophies lead to vastly different outcomes. Leaders must evaluate how each methodology impacts their team’s performance, risk profile, and long-term success. This section systematically compares the two paradigms across four critical dimensions: appropriate use cases, impact on code quality, security posture, and team skill development.

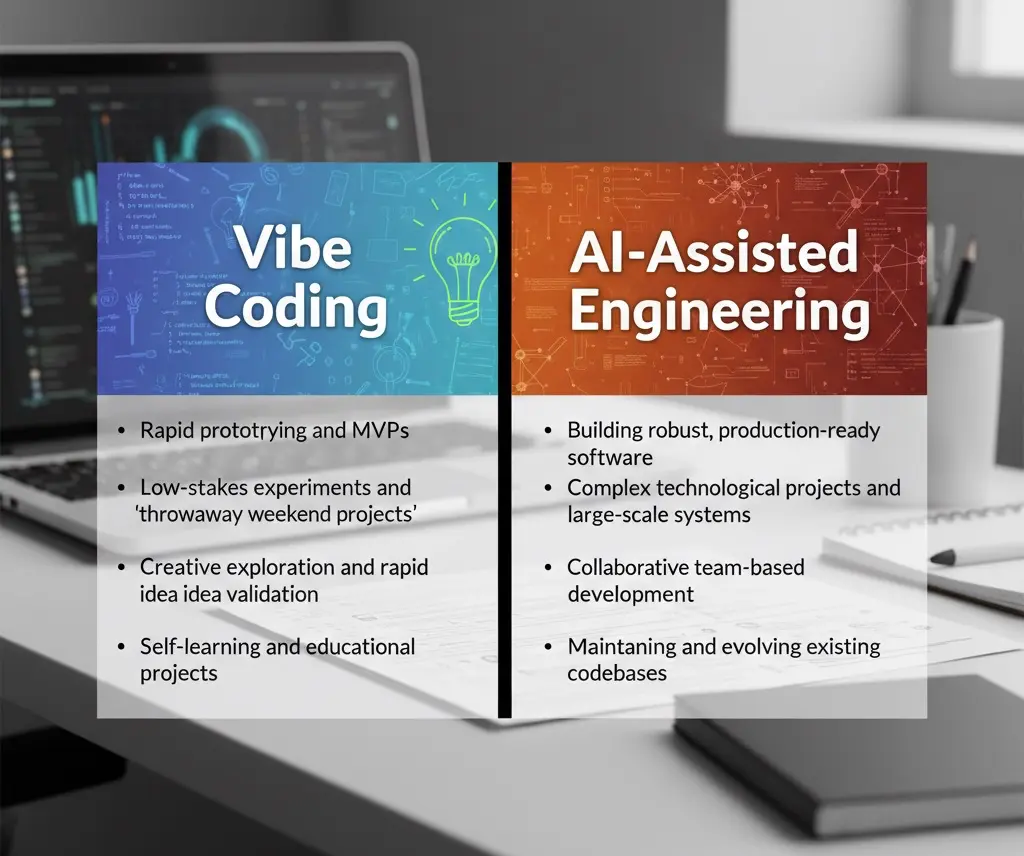

Appropriate Use Cases: Prototyping vs. Production

The most critical distinction for leadership to establish is the boundary between appropriate and inappropriate applications for each approach. Vibe coding is a powerful tool for ideation, while AI-assisted engineering is the framework for production.

Impact on Code Quality and Maintainability

Leaders must evaluate how each methodology impacts long-term code health and total cost of ownership.

In vibe coding, the primary objective is speed, which often comes at the expense of correctness and maintainability. Because code is accepted without deep review, it can quickly accumulate technical debt and, in Karpathy’s words, grow “beyond my usual comprehension.” This leads to the “70% problem,” where an LLM can rapidly produce roughly 70% of a working application but leaves the final 30% unfinished. For a business, that final 30% represents the difference between a flashy demo and a shippable, reliable product. It is where junior engineers get stuck and where hidden maintainability costs, subtle bugs, and architectural flaws reside, requiring significant expert intervention to resolve.

Conversely, AI-assisted engineering uses human oversight as a fundamental guardrail for quality. Because the engineer is ultimately responsible for reviewing, testing, and understanding every line of code, traditional best practices for architecture, modularity, and documentation are naturally upheld. AI accelerates the process but does not circumvent the principles that ensure a codebase remains healthy and maintainable over time.

Security Posture and Inherent Risks

The security implications of each approach are stark. Vibe coding, by its very definition, introduces significant security risks by encouraging the acceptance of unvetted, AI-generated code.

The most common security risks associated with this practice include:

- Insecure Code Suggestions: AI models may generate code with fundamental security flaws, such as missing input validation, improper authentication flows, or unsafe database queries.

- Leaked Secrets: LLMs often generate placeholder credentials, such as API keys or passwords. In a rapid workflow, these placeholders can be inadvertently copied and committed to source control, exposing critical systems.

- Vulnerable Dependencies: An AI may recommend popular open-source libraries to solve a problem without evaluating them for known vulnerabilities or end-of-life status, introducing supply chain risks.

- License and IP Violations: AI can produce code snippets that closely resemble copyrighted code or code released under restrictive licenses, creating potential legal and compliance issues for the organization.

While these risks still exist in an AI-assisted engineering model, the mandatory human review process acts as a critical checkpoint. The engineer’s responsibility is to catch and remediate these potential vulnerabilities before they are integrated into the main codebase, thereby preserving the organization’s security posture.
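To make the "unsafe database queries" risk concrete, the following is a minimal sketch of the kind of pattern a reviewer would be expected to catch. It assumes Python's standard `sqlite3` module and a hypothetical `users` table; the function names are illustrative only and do not come from any particular codebase or tool.

```python
import sqlite3


def build_demo_db():
    # Hypothetical in-memory database used only for this illustration.
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE users (id INTEGER PRIMARY KEY, name TEXT)")
    conn.executemany("INSERT INTO users (name) VALUES (?)", [("alice",), ("bob",)])
    return conn


def find_user_unsafe(conn, username):
    # Anti-pattern: user input is interpolated directly into the SQL text,
    # so a crafted value can change the meaning of the query.
    query = f"SELECT id, name FROM users WHERE name = '{username}'"
    return conn.execute(query).fetchall()


def find_user_safe(conn, username):
    # Parameterized query: the value is passed separately from the SQL text
    # and is never interpreted as SQL.
    query = "SELECT id, name FROM users WHERE name = ?"
    return conn.execute(query, (username,)).fetchall()
```

With an input such as `x' OR '1'='1`, the unsafe version returns every row in the table, while the parameterized version returns none. Both versions behave identically for ordinary names, which is why an unreviewed prototype can appear to work while carrying the flaw.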

Impact on Team Skill Development

The choice of paradigm directly affects an organization’s ability to build a resilient and adaptable engineering team.

Vibe coding can “democratize software development” by lowering the initial barrier to entry. However, over-reliance on this method can lead to a “loss of criticality,” where less experienced engineers fail to develop deep problem-solving skills. The strategic risk is the creation of a “glass house” of engineers who can build quickly but cannot debug or maintain the systems they create, eroding long-term organizational capability.

AI-assisted engineering, in contrast, acts as a “force multiplier” for experienced developers and introduces novel mentorship models. Leaders can champion the practice of “trio programming,” where a senior engineer, a junior engineer, and an AI work in concert. In this model, the senior prompts the AI to generate a solution and then challenges the junior to explain the code—its logic, its trade-offs, and its place in the system. This actively fosters the critical thinking and deep system understanding that vibe coding can erode.

This comparative analysis highlights the need for deliberate governance to guide teams toward the responsible and productive use of AI tools.

Governance and Best Practices for AI Adoption

The challenge for technical leadership is not whether to adopt AI coding tools, but how to do so responsibly. The strategic imperative lies in establishing clear guardrails and workflows that maximize productivity gains while systematically managing the risks identified in the previous section. A successful AI adoption strategy must be intentional, moving teams beyond ad-hoc experimentation toward a disciplined, secure, and scalable model of AI-assisted engineering for all production work.

The following recommendations provide an actionable framework for governing the use of AI coding tools:

- Establish a “Human-in-the-Loop” Mandate. The foundational principle must be what technologist Simon Willison calls the “golden rule” for production-quality AI-assisted programming: no AI-generated code is committed to a repository unless the engineer can “explain exactly what it does to somebody else.” This single principle ensures accountability, enforces understanding, and prevents the accumulation of unvettable “black box” code.

- Treat All AI-Generated Code as a First Draft. Teams must be instructed never to assume AI output is complete or production-ready. All generated code must be subjected to the same rigorous review, testing, and refinement processes as any human-written code. This mindset shifts the interaction from blind acceptance to critical collaboration.

- Champion Spec-Driven Development. For production features, providing an LLM with a clear plan, detailed requirements, and architectural specifications yields a much higher quality outcome than open-ended, “vibe-based” prompting. Encouraging engineers to think through the problem and write a spec first improves the AI’s output and reinforces sound engineering discipline.

- Embed Automated Security Scanning into CI/CD Pipelines. To counter the inherent risk of insecure AI suggestions, automated tooling is essential. Integrating static application security testing (SAST), software composition analysis (SCA), and secrets detection directly into the CI/CD pipeline ensures that every commit—regardless of origin—is automatically scanned for common vulnerabilities.

- Develop Formal Training on Secure AI Usage. Organizations should provide clear guidance to teams on best practices. This training should cover how to craft effective and secure prompts, how to recognize common insecure code patterns generated by AI, and the official governance policies regarding approved AI tools and data handling to prevent compliance violations.

- Encourage AI as a Learning and Onboarding Tool. Beyond code generation, AI assistants are powerful educational tools. Encourage new joiners to use them to ask questions about the codebase and to get explanations of its complex parts. This can significantly accelerate their ramp-up time and reduce the mentoring burden on senior engineers, creating a more efficient and supportive onboarding experience.

- Prepare for the Human Review Bottleneck. As AI dramatically increases the velocity of code generation, the human capacity for rigorous code review becomes the new system constraint. This is a strategic bottleneck that leadership must address proactively through investments in smarter tooling, specialized training in efficient review, and a redesign of workflows to handle increased throughput without sacrificing quality.
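The secrets-detection step recommended above can be sketched in a few lines. This is an illustrative example only, not a substitute for a dedicated scanner: the rule names and the `scan_text` helper are hypothetical, and the small pattern set is an assumption chosen for readability (production tools maintain far larger rule sets and handle false positives).

```python
import re

# Hypothetical, deliberately small rule set for illustration.
SECRET_PATTERNS = {
    # AWS access key IDs start with "AKIA" followed by 16 uppercase
    # alphanumeric characters.
    "aws_access_key_id": re.compile(r"\bAKIA[0-9A-Z]{16}\b"),
    "private_key_header": re.compile(
        r"-----BEGIN (?:RSA |EC |OPENSSH )?PRIVATE KEY-----"
    ),
    # A credential-like name assigned a quoted literal of 8+ characters.
    "hardcoded_credential": re.compile(
        r"(?i)(?:api[_-]?key|secret|password|token)\w*\s*[:=]\s*['\"][^'\"]{8,}['\"]"
    ),
}


def scan_text(text):
    """Return (line_number, rule_name) pairs for lines that match a rule."""
    findings = []
    for line_no, line in enumerate(text.splitlines(), start=1):
        for rule, pattern in SECRET_PATTERNS.items():
            if pattern.search(line):
                findings.append((line_no, rule))
    return findings
```

In a pipeline, a check like this would typically run against the changed files of each commit and fail the build when `scan_text` returns any findings, so that the gate applies equally to human-written and AI-generated code.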

Implementing these practices will provide the structure needed to integrate AI tools safely and effectively, setting the stage for a productive, AI-integrated future.

Charting a Course for an AI-Integrated Future

The emergence of powerful AI coding tools has created a critical inflection point for the software engineering discipline. The central argument of this paper is that technical leaders must draw a clear and deliberate distinction between the exploratory, high-risk practice of vibe coding and the disciplined, high-value framework of AI-assisted engineering. Confusing the two invites technical debt, security vulnerabilities, and a degradation of engineering quality.

The key finding of our analysis is that while vibe coding offers unprecedented speed for ideation and prototyping, it is AI-assisted engineering that provides the necessary framework for responsibly integrating AI’s power into scalable, secure, and maintainable software systems. By mandating human oversight and reinforcing security best practices, organizations can harness AI as a force multiplier that enhances—rather than replaces—the judgment and expertise of their engineering teams.

Looking ahead, the line between these paradigms may blur as AI advances. The future of software development will likely involve a convergence where the rapid, creative experimentation of vibe coding is seamlessly integrated into workflows governed by the rigorous quality control of AI-assisted engineering. For leaders who establish a strong foundation of governance today, this evolution promises a future where engineers are empowered to innovate more rapidly and responsibly than ever before.