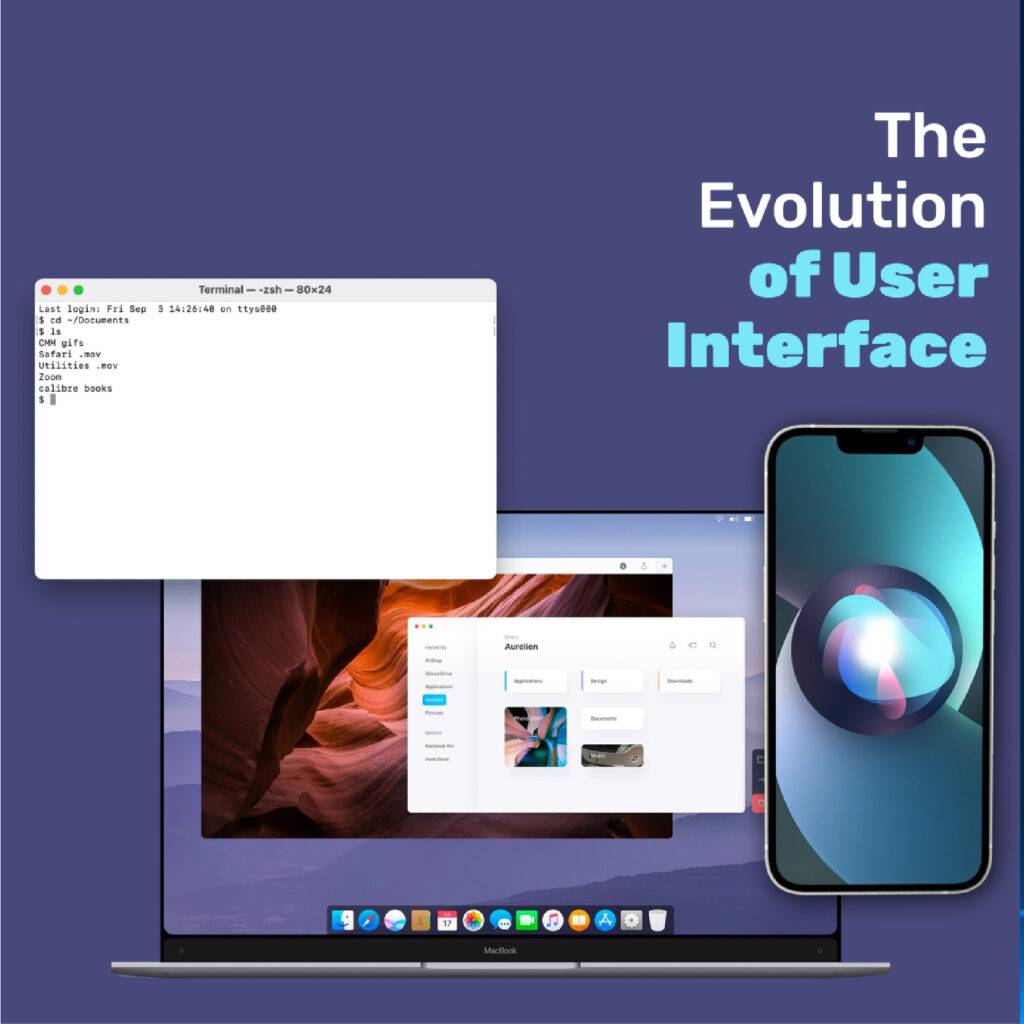

The Command Line Interface: A Humble Beginning

In the early days of computing, when mainframes filled entire rooms and personal computers were still a distant dream, the Command Line Interface (CLI) served as the gateway to the digital world. Pioneering users interacted with these machines by typing commands on a keyboard, using lines of text to communicate their intentions.

The CLI’s simplicity offered real advantages: experienced users gained efficiency and direct control over computer functions.

Complex tasks could be performed with a single command or a script, speeding up workflows. The CLI’s lower resource requirements also made it a perfect fit for devices with limited processing power and memory.

However, the CLI had its fair share of limitations. The steep learning curve made it daunting for newcomers, since the interface required users to memorize commands and their syntax. This barrier often left non-technical users feeling excluded from the world of computing. The CLI also lacked visual feedback, which could lead to user errors and difficulties in interpreting the outcomes of commands. Discoverability was limited as well: nothing on screen hinted at which commands were available.

The Desktop Metaphor: Bridging the Gap

The graphical user interface (GUI) emerged as a solution to make computers more approachable to the general public. The desktop metaphor was a key concept in developing GUIs, providing users with a familiar and relatable environment. This metaphor translated real-world objects like folders, files, and trash bins into digital counterparts, enabling users to interact with computers using a point-and-click approach. The desktop metaphor significantly lowered the barriers to entry, making computing accessible to a broader audience.

The Mac desktop metaphor. (Image by: howtogeek.com)

The first commercially successful implementation of the desktop metaphor was the Apple Macintosh, released in 1984, which built on earlier work at Xerox PARC and on Apple’s own Lisa. Its interface featured icons representing files, folders, and applications, all neatly arranged on a virtual “desktop.” This approach allowed users to interact with their computer using a mouse, clicking and dragging objects as they would in the real world.

The Windows 3.11 desktop metaphor. (Image by: Internet Archive)

Soon after, Microsoft introduced Windows, which built upon the desktop metaphor and brought it to a broader audience. The rivalry between Mac and Windows fueled the evolution of the GUI, with each company striving to make its interface more intuitive and visually appealing. As a result, the GUI became the dominant user interface, pushing the CLI to the background and opening the door for innovations in human-computer interaction.

The journey of the GUI and the desktop metaphor marked a significant milestone in the evolution of user interfaces. It laid the foundation for future developments in UI design, paving the way for the mobile revolution and the rise of skeuomorphism.

From Skeuomorphism to Neumorphism: The Evolution of the Touchscreen Interface

As the 21st century unfolded, a new wave of technological innovation took the world by storm. Mobile phones evolved into sophisticated devices, and smartphones became ubiquitous. These pocket-sized computers introduced an entirely new form of user interface, one that had to be optimized for touchscreens and the unique constraints of mobile devices.

In the early days of smartphones, designers turned to skeuomorphism to help users navigate the uncharted territory of touchscreen interfaces. Skeuomorphism, a design philosophy that mimicked the look and feel of real-world objects, played a crucial role in bridging the gap between the tangible world and the digital realm. By creating interfaces that resembled familiar materials and artifacts, designers sought to make smartphone technology more intuitive and user-friendly.

Apple’s iOS, which debuted on the iPhone in 2007, became a prime example of skeuomorphism in action. Its apps featured icons and elements closely resembling real-life objects, such as a leather-bound calendar or the wooden bookshelf of the iBooks app. This realistic design approach gave users visual cues to help them understand the functionality of the apps and the touchscreen interface. It played a pivotal role in easing the transition from physical buttons and keys to a fully digital, touch-based interaction.

However, skeuomorphism was not without its drawbacks. The reliance on realistic design elements sometimes led to visual clutter and inconsistencies as designers tried to balance aesthetics with usability. Furthermore, as users became more accustomed to interacting with digital interfaces, the need for such realistic design elements began to wane.

As the smartphone landscape matured, designers sought new ways to refine user interfaces and address the limitations of skeuomorphism. Minimalism emerged as a powerful design philosophy, advocating for simplicity, clarity, and a focus on essential elements. This approach departed from the ornate, realistic designs of skeuomorphism and sought to reduce visual clutter while maintaining an intuitive user experience.

With minimalism, designers embraced flat design, using simple shapes, clean lines, and bold colors to create visually striking interfaces. This modern aesthetic prioritized functionality and made it easier for users to navigate apps and websites. The minimalistic approach resonated with users, and soon, tech giants like Apple and Google adopted the style, further cementing its influence on UI design.

As minimalism took hold, a new design trend called neumorphism emerged. Neumorphism aimed to blend the simplicity of minimalism with subtle depth and texture, creating an almost three-dimensional appearance. This design trend used soft gradients, paired light and dark shadows, and rounded edges to achieve a more tactile and immersive interface while still adhering to the principles of minimalism.

UI comparison between iOS 6 and 7 (Image by: OSXDaily)

Neumorphism sought to balance the stark flatness of minimalist design and the tactile familiarity of skeuomorphic elements. The resulting aesthetic was visually appealing and user-friendly, adapting to users’ ever-evolving needs and expectations.
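To make the soft-shadow idea concrete, here is a minimal sketch of how a neumorphic style could be derived from a single base color. The helper names (`shift`, `neumorphic_css`) and the default offsets are illustrative assumptions, not part of any design system: the element takes the background color, and one darker and one lighter shadow are cast in opposite directions to suggest a raised surface.

```python
def shift(hex_color: str, amount: int) -> str:
    """Lighten (amount > 0) or darken (amount < 0) a #rrggbb color, clamped to 0..255."""
    channels = [int(hex_color[i:i + 2], 16) for i in (1, 3, 5)]
    shifted = [max(0, min(255, c + amount)) for c in channels]
    return "#" + "".join(f"{c:02x}" for c in shifted)


def neumorphic_css(base: str, offset: int = 8, blur: int = 16, radius: int = 16) -> str:
    """Build a CSS declaration string for a raised, neumorphic-style element.

    All default values are hypothetical starting points, not recommendations.
    """
    dark, light = shift(base, -30), shift(base, 30)
    return (
        f"background: {base}; "
        f"border-radius: {radius}px; "
        # Dark shadow falls bottom-right, light shadow falls top-left.
        f"box-shadow: {offset}px {offset}px {blur}px {dark}, "
        f"-{offset}px -{offset}px {blur}px {light};"
    )


css = neumorphic_css("#e0e0e0")
print(css)
```

Because the shadows differ only slightly from the background, the element's edges stay low in contrast, which is the source of both the style's softness and its accessibility concerns.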

The transition to simpler designs was driven by several factors, including the desire to improve usability by reducing visual clutter and adapting to users’ increasing familiarity with digital interfaces. Moreover, designers sought to create a more consistent and cohesive design language across devices and platforms, ensuring a seamless experience for users.

While visually appealing, neumorphism also presented challenges. Its low-contrast elements reduced discoverability and raised potential accessibility issues for visually impaired or older users.

Conversational User Interfaces: The Rise and Fall of Digital Assistants

As user interface design continues to evolve, the emergence of conversational user interfaces (CUIs) offers a promising new direction for enhancing how we interact with technology. Conversational interfaces leverage the power of natural language processing and artificial intelligence to create more human-like interactions, providing users with a more intuitive and engaging experience.

Digital assistants like Siri, Alexa, and Google Assistant have been trailblazers in the realm of conversational user interfaces, offering users a glimpse into the potential of natural language-based interactions. These voice-activated assistants revolutionized how we interact with technology, enabling users to perform tasks, access information, and control smart devices using spoken commands.

While these digital assistants made significant strides in the early development of conversational interfaces, they also faced some limitations that have prevented them from becoming the predominant user interface today.

- Language Understanding: Digital assistants have historically struggled to comprehend complex or nuanced language, idiomatic expressions, and regional accents. This often resulted in misinterpretations and incorrect responses, which could lead to user frustration and a lack of trust in the technology.

- Context Awareness: Early digital assistants lacked the ability to maintain context across multiple interactions or understand the user’s intent in ambiguous situations. This made it difficult for users to engage in natural, fluid conversations and limited the assistants’ utility in more complex tasks.

- Limited Customization: Out-of-the-box digital assistants often came with predefined capabilities and a limited range of supported services. This constrained their ability to cater to individual user preferences, adapt to different domains, or integrate seamlessly with third-party applications.

- Privacy Concerns: Voice-activated assistants raised privacy concerns, as the devices were always “listening” for the wake word. Users worried about the potential misuse of their data and the risk of unintended eavesdropping.

- Device Dependence: Digital assistants were often tied to specific devices or platforms, limiting their accessibility and utility for users with different hardware or software ecosystems.

- Human-Machine Interaction: Another factor influencing the adoption of digital assistants was the social aspect of talking to a machine. Many users felt awkward or self-conscious when using voice commands in public or around others, inhibiting the widespread adoption of the technology. Additionally, some users found it difficult to adjust to the idea of engaging in conversations with a machine, as they were accustomed to traditional interfaces.

Despite these limitations, digital assistants like Siri, Alexa, and Google Assistant have played a vital role in popularizing conversational interfaces and demonstrating their potential.

The Next Frontier: Advanced Conversational AI

At the heart of the CUI revolution are conversational AI engines like ChatGPT, which employ advanced language models to understand, process, and respond to user input in a contextually relevant manner. This enables CUIs to engage in dynamic, two-way conversations with users, breaking down the barriers between humans and computers.
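One common way to get contextually relevant replies is to keep the full conversation history and hand it to the model on every turn. The sketch below illustrates that pattern only; `echo_model` is a hypothetical stand-in for a real language model, and the `Conversation` class and message format are assumptions made for this example.

```python
from dataclasses import dataclass, field
from typing import Callable

# One turn of the dialogue: {"role": "user" | "assistant", "content": "..."}
Message = dict[str, str]


def echo_model(history: list[Message]) -> str:
    """Hypothetical stand-in for a language model: reports what context it can see."""
    user_turns = [m["content"] for m in history if m["role"] == "user"]
    return f"I can see {len(user_turns)} user message(s); the latest is: {user_turns[-1]}"


@dataclass
class Conversation:
    model: Callable[[list[Message]], str]
    history: list[Message] = field(default_factory=list)

    def send(self, text: str) -> str:
        # Record the user's turn, then let the model see the whole history,
        # so a follow-up can be interpreted in light of earlier turns.
        self.history.append({"role": "user", "content": text})
        reply = self.model(self.history)
        self.history.append({"role": "assistant", "content": reply})
        return reply


chat = Conversation(model=echo_model)
first = chat.send("What's the weather in Paris?")
second = chat.send("And tomorrow?")
print(second)
```

A follow-up such as "And tomorrow?" only makes sense alongside the earlier question, which is why the second call passes both user turns to the model rather than the latest one alone.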

The potential applications of CUIs are vast, ranging from virtual assistants and customer support to more complex tasks like software development and data analysis. By allowing users to communicate with technology using natural language, CUIs can simplify complex workflows and empower users to accomplish tasks more efficiently.

CUIs can also be pivotal in enhancing accessibility for diverse users, including individuals with visual impairments or motor disabilities, who may struggle to interact with traditional GUIs. By providing an alternative mode of interaction based on voice or text input, CUIs can make technology more inclusive and user-friendly.

However, the development of effective CUIs is not without challenges. Designers must account for the nuances of human language, including idiomatic expressions, cultural differences, and the ever-evolving nature of language itself. Additionally, striking the right balance between AI assistance and human control is crucial to ensure that CUIs enhance user experiences without undermining user autonomy or privacy.

As conversational AI engines like ChatGPT continue to advance, the potential of conversational user interfaces as the next frontier in human-computer interaction becomes increasingly evident. By harnessing the power of natural language processing and artificial intelligence, CUIs promise to reshape our relationship with technology, fostering more intuitive, accessible, and engaging interactions for users across the digital landscape.

Our last word

The convergence of various user interfaces with AI-powered conversational interfaces has the potential to revolutionize the software development industry by ushering in a new paradigm of human-computer interaction. By bridging the gap between traditional GUIs and the power of natural language processing, this hybrid approach can unlock new possibilities for software developers and end-users alike. Leveraging the strengths of each UI type and combining them with advanced conversational AI, developers can create more intuitive, accessible, and efficient user experiences.

Embracing this new paradigm matters for any company that builds software. It can lead to improved user satisfaction, enhanced productivity, and the ability to cater to a broader range of users with diverse needs and abilities. By staying at the forefront of this UI revolution, industry leaders can keep their organizations competitive and innovative in an ever-evolving digital landscape.